1. Introduction

As an Elasticsearch user, you may be familiar with its powerful capabilities to search, analyze, and store large amounts of structured and unstructured data. However, when it comes to searching high-dimensional vector data, Elasticsearch may not be the most efficient solution, even though it has offered vector support since release 7.0. Pinecone, a managed vector database service, is designed specifically for vector and similarity search use cases. In this blog post, we’ll compare Pinecone and Elasticsearch.

2. What is Pinecone?

Pinecone is a fully managed vector database service designed specifically for large-scale similarity search and machine learning applications. It allows you to search and retrieve the most similar items in a high-dimensional vector space efficiently. Pinecone is particularly useful for applications such as recommendation systems, image search, and natural language processing tasks, where similarity search plays a vital role.

In order to understand Pinecone, we’ll need to understand vector data.

2.1 Understanding Vector Data

Vector data is a representation of data in the form of numeric vectors, where each element of the vector represents a specific feature or attribute of the data. Vectors are mathematical objects that can be easily manipulated and compared using algebraic operations, making them a popular choice for representing complex data in machine learning and data analysis applications.

2.2 A Real Example of Vector Data: Word Embeddings

A practical example of vector data is word embeddings in natural language processing (NLP). Word embeddings are high-dimensional vector representations of words that capture their semantic meaning. These embeddings are generated by training machine learning models, such as Word2Vec, GloVe, or BERT, on large text corpora.

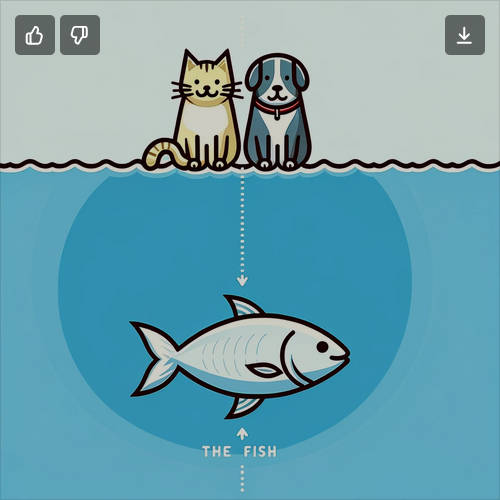

For instance, consider the following word embeddings with 3 dimensions (in practice, embeddings usually have 50-300 dimensions):

“cat”: [0.25, 0.48, -0.83]

“dog”: [0.22, 0.51, -0.81]

“fish”: [-0.1, 0.8, 0.3]

The word embeddings for “cat,” “dog,” and “fish” in the example are a simplified representation of how these words might be positioned in a high-dimensional vector space. In reality, the embeddings are derived by training machine learning models, like Word2Vec or GloVe, on large text corpora to learn the context in which words are used.

The model aims to capture the relationships between words based on their co-occurrence patterns. As a result, words that appear in similar contexts will have similar vector representations. In the example above, “cat” and “dog” are semantically similar because they are both common pets, so their vectors are closer in the vector space compared to the vector representing “fish,” which has a different meaning and usage.
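“Closer” in vector space is usually measured with a similarity function. As a minimal sketch, assuming cosine similarity as the measure (one common choice, not the only one), the snippet below scores the toy 3-dimensional vectors from this section; `cosine_similarity` and `EMBEDDINGS` are illustrative names, not part of any library.

```python
import math

# Toy 3-dimensional embeddings copied from the example above.
EMBEDDINGS = {
    "cat": [0.25, 0.48, -0.83],
    "dog": [0.22, 0.51, -0.81],
    "fish": [-0.1, 0.8, 0.3],
}


def cosine_similarity(a, b):
    """Cosine of the angle between a and b: 1.0 means the same direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


for other in ("dog", "fish"):
    score = cosine_similarity(EMBEDDINGS["cat"], EMBEDDINGS[other])
    print(f"cat vs {other}: {score:.3f}")
```

Running it prints 0.999 for cat vs dog and 0.129 for cat vs fish, matching the intuition that “cat” and “dog” sit much closer together than “cat” and “fish”.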

Keep in mind that this example is deliberately simplified. Real embeddings have far more dimensions, which lets them capture more nuanced relationships between words, but the principle is the same: words with similar meanings and contexts get similar vector representations.

2.3 High-dimensional Vector Data

High-dimensional vector data refers to vectors with a large number of dimensions or features. In many real-world applications, such as natural language processing, image analysis, and recommendation systems, data can be represented as high-dimensional vectors.

As discussed in the previous section, word embeddings can be high-dimensional. Images are another example: an image can be represented as a vector in which each dimension holds the intensity of one color channel at one pixel. Because there is one dimension per channel per pixel, even a modest 224 × 224 RGB image becomes a vector with 224 × 224 × 3 = 150,528 dimensions.
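To make the pixel-to-dimension mapping concrete, here is a minimal sketch that flattens a tiny, made-up 2 × 2 RGB image stored as nested Python lists. The function name `flatten_image` is hypothetical, and a real pipeline would more likely use an array library; the point is only the layout, one vector element per (row, column, channel).

```python
def flatten_image(pixels):
    """Flatten an H x W x C nested list of intensities into one flat vector.

    Elements are emitted in row-major order: (row, column, channel), so each
    output element is the intensity of one color channel at one pixel.
    """
    return [value for row in pixels for pixel in row for value in pixel]


# A hypothetical 2 x 2 RGB image with intensities in 0-255.
tiny_image = [
    [[255, 0, 0], [0, 255, 0]],      # red pixel, green pixel
    [[0, 0, 255], [255, 255, 255]],  # blue pixel, white pixel
]
vector = flatten_image(tiny_image)
print(len(vector))  # 2 * 2 * 3 = 12
print(vector)
```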

Handling high-dimensional vector data efficiently is challenging because of the so-called “curse of dimensionality”: as the number of dimensions increases, the volume of the space grows exponentially, data points become sparse, and distances between points become less discriminative. As a result, it gets harder to find meaningful relationships and patterns in the data, and index structures that work well in low dimensions degrade toward brute-force search. This is where Pinecone comes in, offering a solution designed specifically for these challenges.
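One closely related, measurable symptom of the curse of dimensionality is distance concentration: for random data, a query’s nearest and farthest neighbors end up at almost the same distance as the dimensionality grows. The sketch below assumes points drawn uniformly from a unit hypercube (real embeddings are not uniform) and estimates the nearest-to-farthest distance ratio for several dimensions; the helper name and parameters are hypothetical.

```python
import math
import random


def min_max_distance_ratio(dim, n_points=500, seed=0):
    """Ratio of nearest to farthest Euclidean distance from a random query.

    The query and all points are drawn uniformly from [0, 1]^dim. A ratio
    near 1.0 means "nearest" and "farthest" are barely distinguishable.
    """
    rng = random.Random(seed)  # fixed seed for reproducibility
    query = [rng.random() for _ in range(dim)]
    distances = []
    for _ in range(n_points):
        point = [rng.random() for _ in range(dim)]
        distances.append(math.dist(query, point))
    return min(distances) / max(distances)


for dim in (2, 10, 100, 1000):
    print(f"dim={dim:>4}: nearest/farthest = {min_max_distance_ratio(dim):.2f}")
```

Expect the ratio to climb toward 1.0 as `dim` grows, which is why naive “find the closest point” strategies lose their edge in high dimensions.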

3. Key Differences Between Pinecone and Elasticsearch

3.1 Pinecone vs Elasticsearch – Data Representation

Elasticsearch is a distributed search and analytics engine built on top of Apache Lucene. It excels in searching and analyzing text data and structured data, like JSON documents. Elasticsearch primarily uses an inverted index to perform full-text search efficiently.
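The core idea of an inverted index is a map from each term to the documents that contain it. The toy sketch below builds one over a few made-up documents and answers a Boolean AND query by intersecting posting lists. It is only an illustration: Lucene’s real inverted index also stores term dictionaries, compressed postings, positions, and scoring statistics (Elasticsearch scores with BM25 by default), none of which is shown, and the function names are hypothetical.

```python
from collections import defaultdict

# Hypothetical documents; IDs and text are illustrative only.
docs = {
    1: "the quick brown fox",
    2: "the lazy brown dog",
    3: "a quick red fox jumps",
}


def build_inverted_index(documents):
    """Map each term to the sorted list of document IDs that contain it."""
    index = defaultdict(set)
    for doc_id, text in documents.items():
        for term in text.lower().split():
            index[term].add(doc_id)
    return {term: sorted(ids) for term, ids in index.items()}


def search_all(index, *terms):
    """Boolean AND: IDs of documents that contain every query term."""
    postings = [set(index.get(term, [])) for term in terms]
    return sorted(set.intersection(*postings)) if postings else []


index = build_inverted_index(docs)
print(index["brown"])                     # [1, 2]
print(search_all(index, "quick", "fox"))  # [1, 3]
```

Looking up a term touches only its posting list, not every document, which is what makes full-text search fast.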

On the other hand, Pinecone is a vector database designed to handle high-dimensional vector data. It leverages advanced algorithms and data structures to perform similarity searches in vector spaces efficiently. Pinecone’s core technology is based on approximate nearest neighbor (ANN) search, which allows for fast and scalable similarity search on large vector datasets.
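With Pinecone you do not implement ANN yourself, and this post does not describe Pinecone’s internal algorithms. To illustrate the general idea of trading exactness for speed, the sketch below uses random-hyperplane locality-sensitive hashing (LSH), one classic ANN technique; it is not necessarily what Pinecone uses. The class name and parameters are hypothetical.

```python
import random


class RandomHyperplaneLSH:
    """Approximate nearest-neighbor candidates via random-hyperplane LSH.

    Each vector gets an n_bits signature: bit i is 1 if the vector lies on
    the non-negative side of random hyperplane i. Vectors separated by a small
    angle tend to share a signature, so a query is compared only against
    vectors in its own bucket instead of the whole dataset.
    """

    def __init__(self, dim, n_bits=8, seed=0):
        rng = random.Random(seed)
        self.planes = [[rng.gauss(0, 1) for _ in range(dim)] for _ in range(n_bits)]
        self.buckets = {}

    def signature(self, vector):
        return tuple(
            int(sum(p * v for p, v in zip(plane, vector)) >= 0)
            for plane in self.planes
        )

    def add(self, item_id, vector):
        self.buckets.setdefault(self.signature(vector), []).append((item_id, vector))

    def candidates(self, query):
        """Items sharing the query's bucket; true neighbors can be missed."""
        return [item_id for item_id, _ in self.buckets.get(self.signature(query), [])]


lsh = RandomHyperplaneLSH(dim=3, n_bits=4)
lsh.add("cat", [0.25, 0.48, -0.83])
lsh.add("dog", [0.22, 0.51, -0.81])
lsh.add("fish", [-0.1, 0.8, 0.3])
print(lsh.candidates([0.25, 0.48, -0.83]))
```

More bits make buckets smaller and queries faster but raise the chance of missing a true neighbor; production systems typically combine several hash tables or use other structures such as graph-based indexes.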

3.2 Pinecone vs Elasticsearch – Search Capabilities

Elasticsearch offers powerful full-text search capabilities, including term-based and phrase-based matching, and support for complex Boolean queries. It also provides various text analysis tools, such as tokenizers, filters, and analyzers, to process and index text data effectively.
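In Elasticsearch, an analyzer runs text through a tokenizer and then a chain of token filters (optionally preceded by character filters). The toy pipeline below mimics that order in plain Python; the regex tokenizer and the stop-word list are simplified assumptions, not Elasticsearch’s actual standard tokenizer or stop lists.

```python
import re

# A small, hypothetical stop-word list for illustration.
STOP_WORDS = {"a", "an", "and", "the", "of"}


def tokenize(text):
    """Split on runs of non-alphanumeric characters (a rough approximation
    of a word tokenizer) and drop empty strings."""
    return [token for token in re.split(r"[^0-9A-Za-z]+", text) if token]


def lowercase_filter(tokens):
    return [token.lower() for token in tokens]


def stop_filter(tokens):
    return [token for token in tokens if token not in STOP_WORDS]


def analyze(text):
    """Tokenizer first, then token filters in order."""
    return stop_filter(lowercase_filter(tokenize(text)))


print(analyze("The Quick-Brown Fox, and the Dog!"))
# ['quick', 'brown', 'fox', 'dog']
```

Applying the same analyzer at index time and query time is what lets “Quick-Brown” in a document match a search for “quick brown”.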

In contrast, Pinecone is designed for similarity search in high-dimensional vector spaces. It enables you to perform operations like k-nearest neighbor (k-NN) search, where you find the k items most similar to a given query item. Pinecone is highly optimized for this type of search and aims to deliver low-latency, high-recall results even on very large datasets.
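For comparison, exact k-NN simply scores every stored vector against the query and keeps the top k. This minimal sketch (a hypothetical `knn` helper, cosine similarity, and the toy vectors from section 2.2) shows the operation that an ANN index approximates; its cost grows linearly with the number of stored vectors.

```python
import heapq
import math


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def knn(query, items, k):
    """Exact k-NN: score every (id, vector) pair and keep the k most similar.

    Cost is O(n * d) per query for n vectors of d dimensions, which is
    exactly what ANN indexes try to avoid at scale.
    """
    return heapq.nlargest(k, items, key=lambda item: cosine_similarity(query, item[1]))


items = [
    ("cat", [0.25, 0.48, -0.83]),
    ("dog", [0.22, 0.51, -0.81]),
    ("fish", [-0.1, 0.8, 0.3]),
]
top2 = knn([0.24, 0.50, -0.82], items, k=2)
print([item_id for item_id, _ in top2])
```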

3.3 Pinecone vs Elasticsearch – Integration with Machine Learning

Elasticsearch can be extended with machine learning capabilities using plugins like the Elasticsearch Learning to Rank plugin or the Elasticsearch vector scoring plugin. However, these plugins are limited in their ability to handle high-dimensional vector data efficiently.

Pinecone, on the other hand, is built from the ground up with machine learning applications in mind. It stores whatever vectors your models produce, so you can generate embeddings with popular frameworks like TensorFlow and PyTorch, using either pre-trained models or models you train yourself, and load them directly into Pinecone. This makes it easy to incorporate advanced machine learning techniques into your applications without significant engineering overhead.

4. Getting Started with Pinecone

To get started with Pinecone, you can sign up for a free account at https://www.pinecone.io/. Once you have an account, you can use the Pinecone Python library to interact with the Pinecone service. You can install the library using pip:

pip install pinecone-client

Then you can create an index, upsert your vector data, and run similarity searches through Pinecone’s API. For more information and examples, check out Pinecone’s official documentation.

5. Elasticsearch’s Vector Data Solution

The Elasticsearch team also recognized the rise of vector databases and introduced a new data type, dense_vector, in release 7.0 in 2019. At the time, the feature was experimental and supported a maximum of 500 dimensions. Support for the data type gradually matured, and it was officially released in 7.6 in 2020. As of May 2023, the latest release, 8.7, supports up to 1,024 dimensions for indexed vectors that can be searched through its kNN API, and up to 2,048 dimensions for non-indexed vectors.

Pinecone, by contrast, supports up to 20,000 dimensions. For reference, OpenAI’s latest embedding model at the time of writing, text-embedding-ada-002, produces 1,536-dimensional vectors, which exceeds Elasticsearch 8.7’s 1,024-dimension limit for indexed, searchable vectors.
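These limits can be checked mechanically before you commit to a platform. The sketch below hard-codes the limits quoted in this post (they may have changed since May 2023) and builds, as plain Python dicts, an Elasticsearch 8.x index mapping with an indexed `dense_vector` field and a request body for the approximate `knn` option of the `_search` API. The helper names (`fits`, `dense_vector_mapping`, `knn_search_body`) and the field name `embedding` are hypothetical; send the bodies with whichever Elasticsearch client you use.

```python
# Dimension limits as quoted in this post (Elasticsearch 8.7 and Pinecone,
# May 2023). Check the current documentation before relying on them.
ES_MAX_INDEXED_DIMS = 1024
ES_MAX_NON_INDEXED_DIMS = 2048
PINECONE_MAX_DIMS = 20000


def fits(dims):
    """Report whether vectors of `dims` dimensions fit each option."""
    return {
        "elasticsearch_indexed": dims <= ES_MAX_INDEXED_DIMS,
        "elasticsearch_non_indexed": dims <= ES_MAX_NON_INDEXED_DIMS,
        "pinecone": dims <= PINECONE_MAX_DIMS,
    }


def dense_vector_mapping(dims, field="embedding"):
    """Index-creation body with an indexed dense_vector field (8.x syntax)."""
    return {
        "mappings": {
            "properties": {
                field: {
                    "type": "dense_vector",
                    "dims": dims,
                    "index": True,  # indexed vectors are needed for kNN search
                    "similarity": "cosine",
                }
            }
        }
    }


def knn_search_body(query_vector, k=10, num_candidates=100, field="embedding"):
    """Approximate kNN request body for the _search API."""
    return {
        "knn": {
            "field": field,
            "query_vector": query_vector,
            "k": k,
            "num_candidates": num_candidates,
        }
    }


print(fits(1536))
# {'elasticsearch_indexed': False, 'elasticsearch_non_indexed': True, 'pinecone': True}
```

With 1,536-dimensional embeddings, only the non-indexed Elasticsearch option and Pinecone fit, which is the gap described above.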

Beyond the number of dimensions, carefully evaluate your use case, performance requirements, supported similarity functions, and so on to choose the right vector database for your application.
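The similarity function matters because different functions can rank the same candidates differently. Both Elasticsearch’s dense_vector field and Pinecone indexes let you choose among Euclidean distance, cosine similarity, and dot product. In the small, made-up example below, dot product favors the long vector `a`, while cosine similarity and Euclidean distance both favor `b`, which points almost exactly along the query.

```python
import math


def dot_product(a, b):
    return sum(x * y for x, y in zip(a, b))


def cosine(a, b):
    return dot_product(a, b) / (math.hypot(*a) * math.hypot(*b))


def euclidean(a, b):
    return math.dist(a, b)


query = [1.0, 0.0]
candidates = {"a": [10.0, 10.0], "b": [1.0, 0.1]}

# Higher is better for dot product and cosine; lower is better for distance.
best_by_dot = max(candidates, key=lambda name: dot_product(query, candidates[name]))
best_by_cosine = max(candidates, key=lambda name: cosine(query, candidates[name]))
best_by_l2 = min(candidates, key=lambda name: euclidean(query, candidates[name]))
print(best_by_dot, best_by_cosine, best_by_l2)  # a b b
```

Dot product rewards vector magnitude as well as direction, so it suits embeddings whose length carries meaning; for normalized embeddings, the three functions produce the same ranking.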

6. Conclusion

For Elasticsearch users who need efficient similarity search over high-dimensional vector data, Pinecone is a powerful alternative. Its focus on vector data, its advanced algorithms, and its seamless integration with machine learning frameworks make it an excellent choice for AI applications. Feel free to leave a comment to start a discussion.