The term Transformer has become almost synonymous with modern large language models (LLMs). But when people talk about “encoder-only,” “decoder-only,” or “encoder–decoder” architectures, they are drawing on terminology that predates the Transformer paper itself (Vaswani et al., 2017). This post explains where these terms come from, what they mean today, and how they apply to popular LLMs.

Origins of the Encoder–Decoder Terminology

The idea of separating neural networks into an encoder and a decoder comes from early sequence-to-sequence models in machine translation (2013–2014). For example:

- Cho et al. (2014) introduced the RNN Encoder–Decoder, where the encoder compressed a source sentence into a fixed-length vector, and the decoder generated a translation.

- Sutskever et al. (2014) extended this with LSTMs.

- Bahdanau et al. (2014) added attention, letting the decoder peek at the entire encoder sequence instead of a single vector.

When Transformers arrived in 2017, they kept this split but swapped RNNs for stacks of self-attention layers.

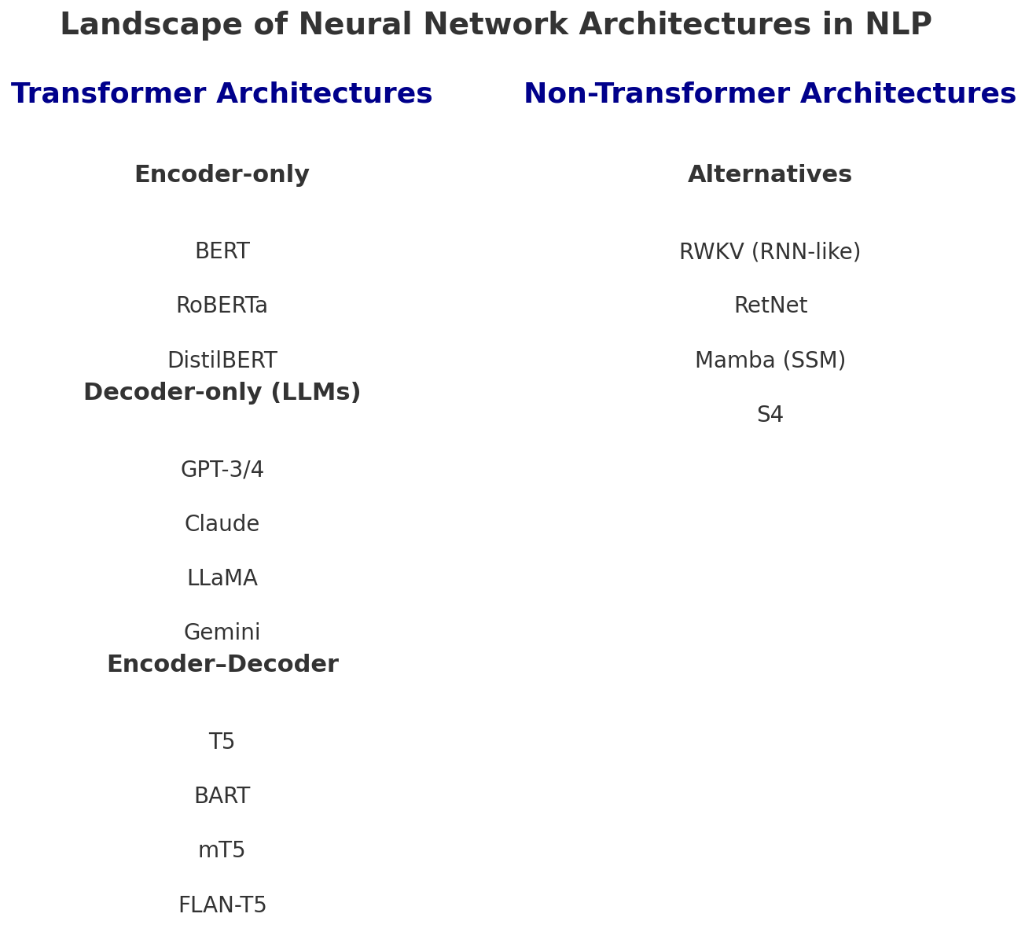

The Three Transformer Families

1. Encoder-Only Models

- Definition: Transformers that consist only of the encoder stack, using bidirectional self-attention (no causal mask).

- How They Work: Each token can attend to tokens on both sides, producing rich contextual embeddings.

- What They’re Good At: Representation learning, classification, named entity recognition, retrieval.

- Examples: BERT, RoBERTa, DistilBERT.

- Note on Terminology: Originally “encoder” meant just “compress input,” but here it has expanded to mean “bidirectional Transformer used for understanding.”

2. Decoder-Only Models

- Definition: Transformers that use only the decoder stack, with causal (masked) self-attention.

- How They Work: At each step, the model predicts a probability distribution over the next token, conditioned only on past tokens.

- What They’re Good At: Autoregressive text generation.

- Examples: GPT-3, GPT-4 (ChatGPT), Anthropic Claude, Google Gemini, Meta LLaMA, Mistral, Qwen.

- Note on Terminology: While they do “encode” context internally, they are called “decoder-only” because architecturally they use the Transformer’s decoder block with causal masking.

3. Encoder–Decoder Models

- Definition: Transformers that use both stacks: an encoder to process the input sequence, and a decoder to generate the output.

- How They Work: The encoder builds contextual embeddings of the input. The decoder attends to these embeddings and its own previously generated tokens to produce the output sequence.

- What They’re Good At: Sequence-to-sequence tasks like translation, summarization, and text-to-text modeling.

- Examples: T5, BART, FLAN-T5, mT5.

- Note on Terminology: This family is the closest to the original 2017 Transformer design for translation.

Comparison Table

| Architecture | Attention Style | Prediction Goal | Typical Use Cases | Popular Models |

|---|---|---|---|---|

| Encoder-only | Bidirectional | Contextual embeddings, not next-token prediction | Classification, retrieval, NER | BERT, RoBERTa |

| Decoder-only | Causal (masked) | Next-token probability distribution | Autoregressive text generation | GPT-3/4, Claude, Gemini, LLaMA, Qwen |

| Encoder–decoder | Bidirectional (encoder) + causal (decoder) | Conditional generation (output sequence given input) | Translation, summarization, seq2seq | T5, BART, FLAN-T5 |

Why the Terms Persist (and Drifted)

- Original Meaning: Encoder → process the source sequence; Decoder → generate the target sequence.

- Modern Usage: In the Transformer context, the community uses “encoder-only,” “decoder-only,” and “encoder–decoder” as architectural shorthand:

- Encoder-only = bidirectional, non-generative.

- Decoder-only = autoregressive, generative.

- Encoder–decoder = input-to-output mapping.

This is a slight deviation from the original definition, but it provides a convenient taxonomy for Transformer-based models.

Takeaway

All three types of Transformer architectures trace back to the encoder–decoder split from the early days of neural machine translation. Today, the most popular LLMs (ChatGPT, Claude, Gemini, LLaMA, etc.) are decoder-only Transformers, since their primary job is to generate text token by token. Encoder-only models like BERT dominate in understanding tasks, while encoder–decoder models like T5 remain powerful for structured sequence-to-sequence problems.

So when we use these terms today, it’s precise in the Transformer context, even though it has drifted slightly from its original meaning in early sequence-to-sequence RNNs.

You may refer to these resources for more in-depth learning: Transformer Architectures for Dummies – Part 1 (Encoder Only Models) and Transformer Architectures for Dummies – Part 2 (Decoder Only Architectures).